modprobe snd_intel8x0m

slmodemd --alsa hw:1

The objective of the function below is to generate a Monte Carlo simulation of stock prices, to find the corresponding call option price at each time step, and then to find the return of a portfolio that is long one call option and short delta shares of the stock. The function parameters are: S0 (current stock price), X (call option exercise price), r (risk-free rate), mu (expected stock return), t (time to maturity), sig (volatility of the stock), steps (number of time steps of length dt), and NoPaths (number of paths for the Monte Carlo simulation). Note that the function calls some external functions (tri_Eprice and blackscholes) that are not defined here.

function value = voltrade(S0,X,r,mu,t,sig,steps,NoPaths)

dt = t/steps;

drift = (mu - 0.5 * sig ^ 2) * dt;

vol = sig * sqrt(dt);

[price, delta, gamma] = tri_Eprice(S0, X, r, t, steps, sig, 0, 1);

Z = [zeros(NoPaths, 1) normrnd(drift, vol, NoPaths, steps)];

Z = (cumsum (Z'))';

S = S0*exp(Z);

ttm= [ones(NoPaths, steps) * dt];

ttm = cumsum (ttm')';

flipttm = [fliplr(ttm) (zeros(NoPaths, 1)+0.0000001)];

ttm = [(zeros(NoPaths, 1)+0.0000001) ttm];

callprice = blackscholes(S, X, r, flipttm, sig, 0) .* exp(-r.*ttm);

Sp = S .* exp(-r.*ttm);

value = mean(callprice - callprice(1,1) + (S0 - Sp) * delta);

subplot (2,1,1);

plot (value);

xlabel ('time step');

ylabel ('portfolio return');

subplot (2,1,2);

plot (mean(S));

xlabel ('time step');

ylabel ('stock price');

voltrade (50,50,0.05,0.3,0.5,0.4,1000,1000);

The return of the portfolio would look as below:

voltrade (50,50,0.05,-0.3,0.5,0.4,1000,1000);

The result:

voltrade (50,50,0.05,0.0,0.5,0.4,1000,1000);

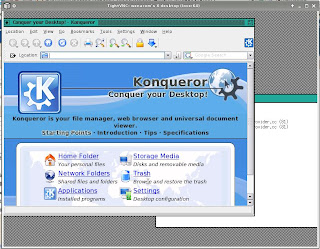
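As a rough illustration of the path-generation step in voltrade, here is a minimal sketch in Python (standard library only, not the original MATLAB). It covers only the simulated stock paths and their mean across paths. The option pricing is left out because tri_Eprice and blackscholes are not defined here. The names simulate_paths and mean_path are made up for this sketch.

```python
import math
import random


def simulate_paths(s0, mu, sig, t, steps, n_paths, seed=0):
    """Simulate geometric Brownian motion paths.

    Returns a list of n_paths paths, each a list of steps + 1 prices
    that starts at s0 (hypothetical helper, mirrors Z and S in voltrade).
    """
    rng = random.Random(seed)
    dt = t / steps
    drift = (mu - 0.5 * sig ** 2) * dt  # mean of each log-increment
    vol = sig * math.sqrt(dt)           # std deviation of each log-increment
    paths = []
    for _ in range(n_paths):
        log_s = 0.0
        path = [s0]
        for _ in range(steps):
            log_s += rng.gauss(drift, vol)  # running sum, like cumsum(Z)
            path.append(s0 * math.exp(log_s))
        paths.append(path)
    return paths


def mean_path(paths):
    """Average price at each time step across all paths, like mean(S)."""
    n = len(paths)
    return [sum(col) / n for col in zip(*paths)]


if __name__ == "__main__":
    paths = simulate_paths(50.0, 0.3, 0.4, 0.5, 50, 2000)
    avg = mean_path(paths)
    print("mean terminal price:", avg[-1])
    print("S0 * exp(mu * t):   ", 50.0 * math.exp(0.3 * 0.5))
```

With mu = 0.3 and t = 0.5, as in the first call above, the last entry of the mean path should come out close to S0*exp(mu*t), roughly 58, up to Monte Carlo noise.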

X11Forwarding yes

X11DisplayOffset 10

X11UseLocalhost yes

And, restart sshd:

/etc/rc.d/rc.sshd restart

Now, you'll need to re-ssh to LM using PuTTY. Check the event log to ensure that "X11 forwarding enabled" is there, and in the terminal type echo $DISPLAY to check whether it has been set by PuTTY.

xrdb $HOME/.Xresources

xsetroot -solid grey

xterm -geometry 80x24+10+10 -ls -title "$VNCDESKTOP Desktop" &

twm &

This answer is actually a bad one, at least compared to Ariya's answer, in the sense that the probability that a call to this function returns its random number on the first pass, without repeating either loop, is rather low. Huh?! Okay, basically the inner loop will hit 80% of the time. Why? Because rand5() has 5 possible outcomes, and 4 of them are used to generate a discrete random integer in 1..10 while 1 is deliberately ignored. Meanwhile, the outer loop will hit 70% of the time, since 7 of those 10 values are accepted. So, in the end the code will finish without repeating any loop only 56% of the time (80% x 70%). Actually, I think Ariya's answers are some of the most efficient implementations possible, especially the first one, hehe. Had I seen the challenge earlier, I wouldn't have been able to produce an implementation of the same quality; it would have been so-so, like the code below :(.

int r,x;

do{

do{

r = rand5();

if (r <= 2) x = rand5(); /* x in 1..5 */

else if (r <= 4) x = rand5() + 5; /* x in 6..10; r == 5 is rejected */

}while (r > 4);

}while (x > 7);

return x;
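To check the 80% x 70% = 56% argument empirically, here is a small simulation sketch in Python of the same two-loop rejection scheme. It assumes rand5() is a fair draw from 1..5, modelled here with random.randint. The helper names rand7 and estimate are made up for this sketch.

```python
import random
from collections import Counter


def rand5(rng):
    """Assumed fair draw from 1..5."""
    return rng.randint(1, 5)


def rand7(rng):
    """Two-stage rejection sampling, same logic as the C code.

    Returns (value, first_pass), where first_pass is True when
    neither loop had to repeat.
    """
    first_pass = True
    while True:
        r = rand5(rng)
        if r == 5:              # inner loop rejects r == 5
            first_pass = False
            continue
        x = rand5(rng) if r <= 2 else rand5(rng) + 5  # x uniform on 1..10
        if x <= 7:              # outer loop rejects 8, 9, 10
            return x, first_pass
        first_pass = False


def estimate(n, seed=0):
    """Return (fraction of first-pass calls, relative frequency per value)."""
    rng = random.Random(seed)
    counts = Counter()
    hits = 0
    for _ in range(n):
        x, first = rand7(rng)
        counts[x] += 1
        hits += first
    return hits / n, {k: counts[k] / n for k in sorted(counts)}


if __name__ == "__main__":
    frac, freqs = estimate(100000)
    print("first-pass fraction:", frac)
    print("frequencies:", freqs)
```

The first-pass fraction should land near 0.56 and each of the seven frequencies near 1/7, up to sampling noise.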

function r = rand5(m,n)

r = floor(5 * rand(m,n) + 1);

This code will generate an m-by-n matrix of discrete random numbers 1..5. Let's see the code to generate rand7.

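function r = rand7(n)

m = 1000; % number of rand5 draws summed for each output

sig = sqrt(2); % standard deviation of a single rand5 draw

mu = 3; % mean of a single rand5 draw

temp = sum(rand5(m,n)); % column sums, one per output

zn = (temp - m * mu)/(sig * sqrt(m)); % approximately standard normal (central limit theorem)

x=0.5*erfc(-zn/sqrt(2)); % standard normal CDF of zn, approximately uniform on (0,1)

r = floor(mod(x*70,7))+1; % map to 1..7

The code above will generate a vector of n discrete random numbers 1..7.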
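For readers without MATLAB, the same idea can be sketched in Python with the standard library only. It sums m draws of rand5, standardizes the sum, pushes it through the standard normal CDF and maps the result to 1..7. The name rand7_clt is made up for this sketch. Note that the output is only approximately uniform, because the sum is discrete and the normal approximation is not exact.

```python
import math
import random


def rand7_clt(n, m=1000, seed=0):
    """Return n values in 1..7 built from sums of m fair rand5 draws."""
    rng = random.Random(seed)
    mu = 3.0               # mean of one draw from 1..5
    sig = math.sqrt(2.0)   # standard deviation of one draw from 1..5
    out = []
    for _ in range(n):
        total = sum(rng.choices((1, 2, 3, 4, 5), k=m))
        zn = (total - m * mu) / (sig * math.sqrt(m))  # roughly N(0, 1)
        x = 0.5 * math.erfc(-zn / math.sqrt(2.0))     # normal CDF of zn
        out.append(int(math.floor((x * 70) % 7)) + 1)
    return out


if __name__ == "__main__":
    vals = rand7_clt(2000)
    for k in range(1, 8):
        print(k, vals.count(k) / len(vals))
```

Each output costs m = 1000 calls to rand5, so this is far more expensive than the rejection approach, and the printed frequencies should only be expected to sit roughly around 1/7.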